Watching this week’s I/O livestream I came away somewhat awestruck by Google’s vision.

Not surprisingly, it’s ambitious and bold. Gone are the days of talking about tablets and phones. So meh. Today it’s all about neural networks and machine learning and augmented and mixed reality.

I hope I’m not alone in feeling that this was one of the more complex keynotes at the annual conference for developers. At times it felt like sitting in on a first-year university engineering class.

Given that we all have laptops, tablets and smartphones (ones that are “good enough” to last several years without regular upgrades), it would make sense that the big platform players like Amazon, Microsoft, Apple, and Google turn their attention to building the equivalent of the man behind the curtain. That all-knowing, all-seeing super being. All that data we provide back to the mothership is being used to build the future. And, ironically, we’re not just doing it for free. By buying stuff like Google Home and Amazon Alexa we’re actually paying for the privilege of arming the information superpowers.

Google CEO Sundar Pichai, in a relaxed manner, described the idea of “training” neural networks, and then in turn employing “inferring” algorithms that a machine can use to, for example, tell the difference between a cat and a dog and surface the appropriate image to an app. I admit, it was heady stuff, and I didn’t really understand the details. The big picture, though, seems to be either an H.G. Wells utopia or an Orwellian dystopia. Or maybe, as my Canadian side would suggest, somewhere in between.
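For the curious, the split between “training” and “inferring” can be roughly sketched in a few lines of Python. This is a toy illustration, not anything Google showed on stage: the data, the two made-up features, and the function names `train` and `infer` are all assumptions, and a single artificial neuron stands in for a real neural network.

```python
import math

# Hypothetical toy data, invented for illustration only.
# Each example: (body_size 0..1, ear_pointiness 0..1) -> label (0 = cat, 1 = dog)
TRAINING_DATA = [
    ((0.10, 0.90), 0),
    ((0.15, 0.80), 0),
    ((0.20, 0.95), 0),
    ((0.60, 0.30), 1),
    ((0.90, 0.20), 1),
    ((0.50, 0.40), 1),
]


def sigmoid(z):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(data, epochs=2000, lr=0.5):
    """'Training': repeatedly nudge the weights to shrink the prediction error."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in data:
            pred = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = pred - label
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b


def infer(model, features):
    """'Inference': apply the already-trained weights to a new example."""
    w, b = model
    score = sigmoid(w[0] * features[0] + w[1] * features[1] + b)
    return "dog" if score >= 0.5 else "cat"


model = train(TRAINING_DATA)
print(infer(model, (0.15, 0.88)))  # a small animal with pointy ears
print(infer(model, (0.65, 0.30)))  # a bigger animal with floppy ears
```

With this toy data the two calls should come back as cat and dog. The expensive part (training) happens once; the cheap part (inference) is what an app would run every time it needs an answer.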

Imagine then, the idea of neural networks building their own neural networks. With enough computing power what would a machine learning-based future look like? What if computers could replicate themselves, learn from their failings, improve their ability to analyze data, and, ultimately, become more powerful, more accurate, more all-knowing? And you thought a $6 million bionic man was pretty impressive. I’ll see your Duran Duran greatest hits CD and raise you 6 million petabytes.

Ideas like these at I/O 2017 made those of past years look like the stuff of Lego. Google Glass? Old school. We are talking about BIG ideas, BIG visions, BIG everything.

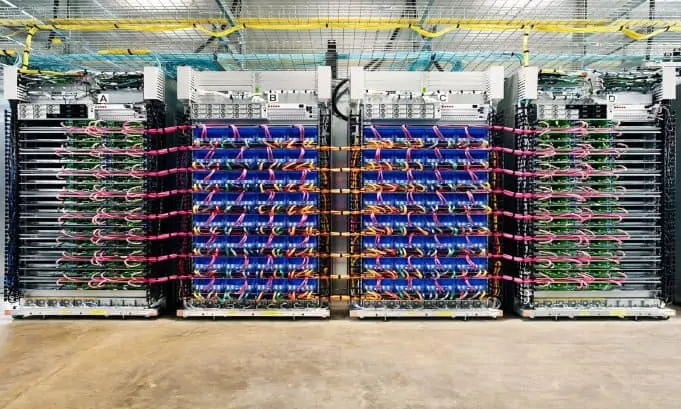

So big that Google even unveiled a new chip to power artificial intelligence cloud applications. It’s called TPU. I have no idea why. I do know Amazon will at some point have something to say about that. Armies of data centers poised to do cloud AI battle. At the ready, sir.

In the short term it seems these projects will first surface in “assistant” devices. Google Home and Amazon Alexa are the two front-runners currently, with the latter selling like the mutant child of a Pet Rock and a Cabbage Patch doll. We issue voice commands. The cloud interprets, analyzes. And then we get information in return. Order a pizza. Turn off lights. Play trivia games. Check the weather. Yes, we’ve been able to do all this stuff before. But we’re entering an era where the line between our lives and the machines is becoming ever more blurred. Before, we had to pull out our smartphones or laptops to interact. Now we just speak into the void. The machines are becoming more omnipresent.

It’s not lost on me, however, that grandiose visions also accompany grandiose economies.

I recall circa 2000, when I was at Cisco, we were all about the Economy 2.0. It was different. Like last time? No, different. This time it’s different. Oh! There was the pre-Economy 2.0 and there was the post-Economy 2.0 that, for some reason, entailed more trips to Las Vegas. Much of that hype eventually did come true (e.g., Amazon), despite the huge crash that followed so many parties, so many “red hot” IPOs as rated by Red Herring magazine. So part of me wonders if we’re balancing on the edge of a cliff.

Part of me also wonders: Why can’t Google just focus on some plebeian things too? Like reducing the memory hog that has become Google Chrome (regardless, still my favorite browser)? Or making notifications work properly on Android Wear 2.0? You know, throw us a bone.

If in 2018 or 2019 we’re talking, again, about basic tablets and smartphones and laptops at I/O — what’s machine learning? — then I suspect our grand aspirations might be on hold for a bit. But that will only be temporary. Because when machines start learning from themselves and building themselves at scale. Well, I can’t even imagine…